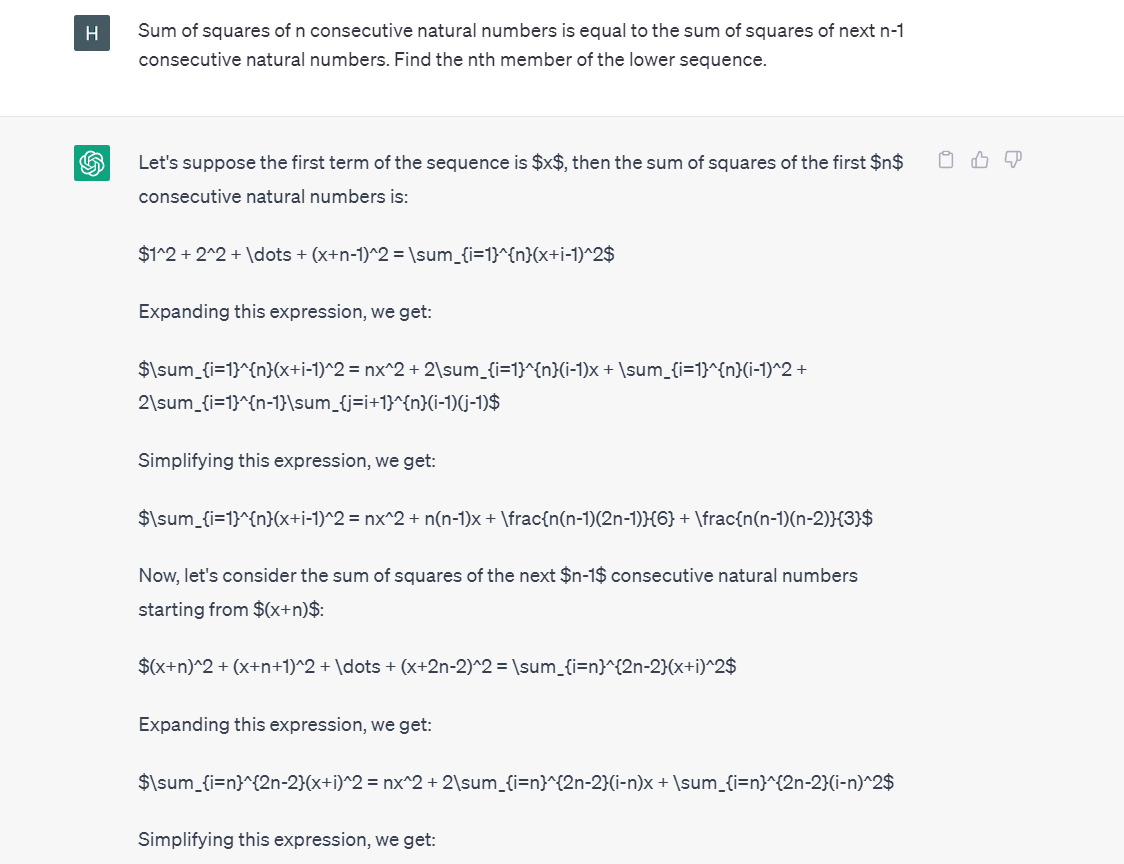

ChatGPT correctly understands what is supposed to be calculated, and it correctly calculates the sum of the lower sequence (n consecutive integers starting from x). It does not do as well with the sum of the higher sequence (the next n-1 consecutive integers, starting from x+n): it makes an error while trying to simplify the expression it started with. The correct simplification of that sum is (n-1)(x+n)+(n-1)(n-2)/2. No correct program, written in any language, would make such a mistake, and writing such a program would not be a hard elementary school assignment. The problem is that ChatGPT does not call a routine that would reliably calculate that simplification. After it incorrectly calculates that sum, it correctly understands the condition that the sum has to equal the sum of the lower sequence, but after equating the two sums it makes another mistake solving that equation. The two errors do not cancel each other out, so it finally returns a wrong answer. It does correctly understand that x is not the value it should return, but x+n-1, and it correctly adds n-1 to x; since x was not calculated properly, however, the answer is wrong.
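To show how little is needed for such a routine, here is a minimal Java sketch. It is not the routine ChatGPT should have called, and the class and method names are hypothetical: it computes both sums term by term, checks the closed form (n-1)(x+n)+(n-1)(n-2)/2 against the brute-force sum, and solves the equated sums for x, which gives x=(n-1)^2 and the requested answer x+n-1=n(n-1).

```java
// Hypothetical helper class: brute-force sums, the closed form of the higher sum,
// and the solution of n*x + n(n-1)/2 = (n-1)*x + (n-1)n + (n-1)(n-2)/2 for x.
final class ConsecutiveSums {

    // Sum of the n consecutive integers x, x+1, ..., x+n-1, computed term by term.
    static long lowerSum(long x, long n) {
        long s = 0;
        for (long j = 0; j < n; j++) s += x + j;
        return s;
    }

    // Sum of the next n-1 consecutive integers x+n, ..., x+2n-2, computed term by term.
    static long higherSum(long x, long n) {
        long s = 0;
        for (long j = 0; j < n - 1; j++) s += x + n + j;
        return s;
    }

    // Closed form of the higher sum: (n-1)(x+n) + (n-1)(n-2)/2.
    // (n-1)(n-2) is a product of consecutive integers, so the division is exact.
    static long higherClosedForm(long x, long n) {
        return (n - 1) * (x + n) + (n - 1) * (n - 2) / 2;
    }

    // Subtracting (n-1)*x from both sides of the equated sums leaves x alone on the left.
    static long solveX(long n) {
        return (n - 1) * n + (n - 1) * (n - 2) / 2 - n * (n - 1) / 2;
    }

    // The value the question asks for: the last member of the lower sequence, x + n - 1.
    static long answer(long n) {
        return solveX(n) + n - 1;
    }

    public static void main(String[] args) {
        for (long n = 2; n <= 5; n++) {
            long x = solveX(n);
            System.out.printf("n=%d x=%d answer=%d lower=%d higher=%d closed=%d%n",
                    n, x, answer(n), lowerSum(x, n), higherSum(x, n), higherClosedForm(x, n));
        }
    }
}
```

Computing the sums term by term gives an independent check on any symbolic simplification, which is exactly the kind of cross-check that was missing.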

To be more precise, it should be noted that, because its output is excessively shortened, it is not clear whether the two errors were of the same kind. The second one might be a wrong "combining of like terms" (x^1 and x^0), which is the expression it heavily uses to describe such tasks, while the first one could also be that, or it could be a wrong translation of the meaning of the expanded sum notation +…+, or both. I did not immediately do the proper job of making ChatGPT explain how it got that wrong, so I came back to that session later and tried to find out what exactly went wrong in each step.

With respect to the second mistake:

Within a month, ChatGPT made no progress whatsoever on this problem and returned exactly the same wrong result, although a new version of it had been built and deployed in the meantime (Release notes, March 23). Its release notes, https://help.openai.com/en/articles/6825453-chatgpt-release-notes, describe something that I would interpret as a move in the right direction. A simple double check of the produced text output, using programs that reliably recognize and evaluate arithmetic, should do the job, at least in this case, and I can imagine in many others too. Separating linguistic tasks, which should be solved by the neural network, from arithmetic tasks, which should be solved programmatically, would resolve this problem. I also applied to join the Plugin Waitlist and indicated that I am interested in "those that enhance its mathematical capabilities", because I am not sure that without them I would see any progress in another month.

But at least this time I could see exactly that within a month ChatGPT had learned neither how to correctly “distribute the (n-1) term on the right side” (it arbitrarily added a 1/2 factor to (n-1)(n-1)), nor how to correctly “simplify the expression on the right side”: (n-1)(n-1)/2 + ((n-1)(n-2))/2 is, in its opinion, n^2/2 - n/2 - n/2 + 3n/2 - 3/2. This discouraged me from checking for further mistakes, because there is no sense in keeping precise track of them.
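The kind of double check suggested earlier can be sketched in a few lines of Java (hypothetical class name). The correct simplification of (n-1)(n-1)/2 + (n-1)(n-2)/2 is n^2 - 5n/2 + 3/2. The sketch doubles every expression so that all values stay integers, then evaluates the original expression, ChatGPT's version and the correct version at a few integer values of n. Such sampling can only show that two expressions differ, not prove that they are equal, but here one mismatch is enough.

```java
// Hypothetical double check: evaluate a claimed simplification against the original
// expression at sample points. All expressions are doubled to avoid fractions.
final class SimplificationCheck {

    // 2 * [(n-1)(n-1)/2 + (n-1)(n-2)/2]
    static long twiceOriginal(long n) {
        return (n - 1) * (n - 1) + (n - 1) * (n - 2);
    }

    // 2 * [n^2/2 - n/2 - n/2 + 3n/2 - 3/2], the simplification ChatGPT returned
    static long twiceClaimed(long n) {
        return n * n - n - n + 3 * n - 3;
    }

    // 2 * [n^2 - 5n/2 + 3/2], the correct simplification
    static long twiceCorrect(long n) {
        return 2 * n * n - 5 * n + 3;
    }

    public static void main(String[] args) {
        for (long n = 1; n <= 5; n++) {
            long orig = twiceOriginal(n);
            System.out.printf("n=%d original=%d claimed=%d correct=%d%s%n",
                    n, orig, twiceClaimed(n), twiceCorrect(n),
                    orig == twiceClaimed(n) ? "" : "  <- claimed simplification is wrong");
        }
    }
}
```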

I was inspired to try this exercise in the first place by watching the Mathologer episode "What's hiding beneath? Animating a mathemagical gem", which gives a visual proof that the proposition described in the question posed to ChatGPT partitions the set of natural numbers into pairs of subsets (subsequences) that together cover the whole set, and that for each pair the condition that their sums are equal holds.

Each pair starts with $x=(n-1)^2$, which is the first member of the lower sequence; the last member of the lower sequence is $y=x+n-1=n(n-1)$, which is the value we were seeking. The first member of the higher sequence is $z=y+1=x+n=n^2-n+1$, and the last member of the higher sequence is $w=z+n-2=n^2-1$. Let us prove that this is true:

The sum of the lower sequence, starting from $y$ in reverse order, is:

$y+\sum\limits_{i = 1}^{n-1}{(y-i)}$

The sum of the higher sequence is:

$\sum\limits_{i = 1}^{n-1}{(y+i)}$

Since the two sums should be equal:

$y+\sum\limits_{i = 1}^{n-1}{(y-i)} = \sum\limits_{i = 1}^{n-1}{(y+i)}$

$y=\sum\limits_{i = 1}^{n-1}{(y+i)} - \sum\limits_{i = 1}^{n-1}{(y-i)}$

$y=\sum\limits_{i = 1}^{n-1}{[(y+i)-(y-i)]}$

$y=\sum\limits_{i = 1}^{n-1}{2i} = 2\sum\limits_{i = 1}^{n-1}{i} = n(n-1)$
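The formulas for $x$, $y$, $z$ and $w$ can also be checked numerically. The following Java sketch (hypothetical class name, for illustration only) sums both sequences term by term for a given $n$ and confirms their lengths and their equal sums.

```java
// Checks, for exponent 1, that the n numbers (n-1)^2 .. n(n-1) have the same sum
// as the n-1 numbers n^2-n+1 .. n^2-1 (hypothetical helper class).
final class LinearPairs {

    // Sum of the integers from 'from' to 'to', inclusive.
    static long rangeSum(long from, long to) {
        long s = 0;
        for (long v = from; v <= to; v++) s += v;
        return s;
    }

    static boolean pairHolds(long n) {
        long x = (n - 1) * (n - 1); // first member of the lower sequence
        long y = n * (n - 1);       // last member of the lower sequence
        long z = y + 1;             // first member of the higher sequence
        long w = n * n - 1;         // last member of the higher sequence
        return y - x + 1 == n && w - z + 1 == n - 1 && rangeSum(x, y) == rangeSum(z, w);
    }

    public static void main(String[] args) {
        for (long n = 2; n <= 5; n++) {
            long x = (n - 1) * (n - 1), y = n * (n - 1), w = n * n - 1;
            System.out.printf("n=%d: %d..%d | %d..%d  sum=%d  holds=%b%n",
                    n, x, y, y + 1, w, rangeSum(x, y), pairHolds(n));
        }
    }
}
```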

The same Mathologer episode also answers another question with a visual proof; in fact, it starts with this one: the sum of squares of n consecutive natural numbers is equal to the sum of squares of the next n-1 consecutive natural numbers. Find the nth member of the lower sequence.

The sum of squares of the lower sequence, starting from $y$ in reverse order, is:

$y^2+\sum\limits_{i = 1}^{n-1}{(y-i)^2}$

The sum of squares of the higher sequence is:

$\sum\limits_{i = 1}^{n-1}{(y+i)^2}$

Since the two sums should be equal:

$y^2+\sum\limits_{i = 1}^{n-1}{(y-i)^2} = \sum\limits_{i = 1}^{n-1}{(y+i)^2}$

$y^2=\sum\limits_{i = 1}^{n-1}{(y+i)^2} - \sum\limits_{i = 1}^{n-1}{(y-i)^2}$

$y^2=\sum\limits_{i = 1}^{n-1}{[(y+i)^2-(y-i)^2]}$

$y^2=\sum\limits_{i = 1}^{n-1}{(y^2+2yi+i^2-y^2+2yi-i^2)}$

$y^2=\sum\limits_{i = 1}^{n-1}{4yi} = 4y\sum\limits_{i = 1}^{n-1}{i} = 2yn(n-1)$

$y=2n(n-1)$

This time there is obviously a growing gap between consecutive pairs of sequences, because the lower sequence starts with $x=y-(n-1)=(2n-1)(n-1)$, the higher sequence starts with $z=y+1=2n(n-1)+1$, and it ends with $w=z+n-2=2n(n-1)+1+n-2=2n(n-1)+n-1=(2n+1)(n-1)$. In the previous example, the next $x$, evaluated at $n+1$, was equal to the current $w$ (evaluated at $n$) plus 1. Namely, there was $\left.{x}\right|_{n+1}-\left.{w}\right|_{n}=n^2-(n^2-1)=1$, so we had:

$1+2=3$

$4+5+6=7+8$

$9+10+11+12=13+14+15$

$16+17+18+19+20=21+22+23+24$

. . .

while here it is $\left.{x}\right|_{n+1}-\left.{w}\right|_{n}=(2(n+1)-1)(n+1-1)-(2n+1)(n-1)=(2n+1)n-(2n+1)(n-1)=2n+1$, which results in:

$3^2+4^2=5^2$

$10^2+11^2+12^2=13^2+14^2$

$21^2+22^2+23^2+24^2=25^2+26^2+27^2$

$36^2+37^2+38^2+39^2+40^2=41^2+42^2+43^2+44^2$

. . .
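The squared pairs and the gap $\left.{x}\right|_{n+1}-\left.{w}\right|_{n}=2n+1$ can be checked the same way. In this Java sketch (hypothetical class and method names), `lowerFirst`, `lowerLast` and `higherLast` stand for $x$, $y$ and $w$ as defined above.

```java
// Checks the pairs of equal sums of squares and the gap between consecutive pairs
// (hypothetical helper class, for illustration only).
final class SquarePairs {

    // Sum of v^2 for v from 'from' to 'to', inclusive.
    static long squareSum(long from, long to) {
        long s = 0;
        for (long v = from; v <= to; v++) s += v * v;
        return s;
    }

    static long lowerFirst(long n) { return (2 * n - 1) * (n - 1); } // x
    static long lowerLast(long n)  { return 2 * n * (n - 1); }       // y
    static long higherLast(long n) { return (2 * n + 1) * (n - 1); } // w

    // Lower sequence x..y (n terms) against higher sequence y+1..w (n-1 terms).
    static boolean pairHolds(long n) {
        return squareSum(lowerFirst(n), lowerLast(n)) == squareSum(lowerLast(n) + 1, higherLast(n));
    }

    public static void main(String[] args) {
        for (long n = 2; n <= 5; n++) {
            System.out.printf("n=%d: %d..%d | %d..%d  holds=%b  x(n+1)-w(n)=%d%n",
                    n, lowerFirst(n), lowerLast(n), lowerLast(n) + 1, higherLast(n),
                    pairHolds(n), lowerFirst(n + 1) - higherLast(n));
        }
    }
}
```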

Although there are infinitely many pairs of adjacent sequences of lengths n and n-1 with equal sums for both exponents 1 and 2, for exponent 3, i.e. for cubes, there is no such pair. As Mathologer himself pointed out, it follows from Fermat's Last Theorem that the pattern cannot continue for cubes: there are no natural numbers $x,y,z$ that satisfy $x^3+y^3=z^3$, so the base case n=2 is impossible for cubes. However, it is also the case that there is no other base case for cubes: there is no natural number n for which the sum of cubes of n consecutive positive integers equals the sum of cubes of the next n-1 integers. This means we can find neither a corresponding value of y for each value of n greater than or equal to 2, nor any base case greater than 2; there is no n at all for which such a y exists. Being a layman mathematician, I did not find this so obvious, so I tried to prove the statement by applying the same logic used for exponents 1 and 2. Assuming that the pattern was not broken, the sum of cubes of the lower sequence would be, starting from y in reverse order:

$y^3+\sum\limits_{i = 1}^{n-1}{(y-i)^3}$

The sum of cubes of the higher sequence:

$\sum\limits_{i = 1}^{n-1}{(y+i)^3}$

Since the two sums should be equal:

$y^3+\sum\limits_{i = 1}^{n-1}{(y-i)^3} = \sum\limits_{i = 1}^{n-1}{(y+i)^3}$

$y^3=\sum\limits_{i = 1}^{n-1}{[(y+i)^3-(y-i)^3]}$

$y^3=\sum\limits_{i = 1}^{n-1}{[(y^3+3y^2i+3yi^2+i^3)-(y^3-3y^2i+3yi^2-i^3)]}$

$y^3=\sum\limits_{i = 1}^{n-1}{(6y^2i+2i^3)}$

$y^3=6y^2\sum\limits_{i = 1}^{n-1}{i}+2\sum\limits_{i = 1}^{n-1}{i^3}$

$y^3=3y^2n(n-1)+\frac{1}{2}n^2(n-1)^2$
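The identity behind the last step, $\sum_{i=1}^{n-1}[(y+i)^3-(y-i)^3]=3y^2n(n-1)+\frac{1}{2}n^2(n-1)^2$, can be spot-checked numerically. The Java sketch below (hypothetical class name) compares the term-by-term sum with the closed form on a grid of small $y$ and $n$. This is a sanity check of the algebra, not a proof.

```java
// Spot-checks sum_{i=1}^{n-1} [(y+i)^3 - (y-i)^3] == 3 y^2 n(n-1) + n^2 (n-1)^2 / 2
// (hypothetical helper class).
final class CubeDifferenceCheck {

    // Left side, computed term by term.
    static long bruteForce(long y, long n) {
        long s = 0;
        for (long i = 1; i <= n - 1; i++) {
            long a = y + i, b = y - i;
            s += a * a * a - b * b * b;
        }
        return s;
    }

    // Right side; n(n-1) is even, so m*m is divisible by 4 and the division is exact.
    static long closedForm(long y, long n) {
        long m = n * (n - 1);
        return 3 * y * y * m + m * m / 2;
    }

    public static void main(String[] args) {
        boolean allMatch = true;
        for (long n = 2; n <= 30; n++)
            for (long y = 0; y <= 100; y++)
                allMatch &= bruteForce(y, n) == closedForm(y, n);
        System.out.println("identity holds on the sampled range: " + allMatch);
    }
}
```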

We can pause and contemplate a bit here. It is obvious what breaks the pattern: the constant term, which does not allow division by $y^2$ without a remainder. Without it, we would get $y=3n(n-1)$, exactly what the previously suggested pattern for $y$ predicts, namely the exponent multiplied by $n(n-1)$. So one path of investigation would be to allow a correction of the lower sum by that term, and to re-establish the pattern in that way. If we started with the corrected (increased) lower sum:

$y^3+\sum\limits_{i = 1}^{n-1}{(y-i)^3}+2\sum\limits_{i = 1}^{n-1}{i^3}$

we would get exactly $y=3n(n-1)$, and infinitely many instances of such (corrected) equations:

$5^3+6^3+2(1^3)=7^3$

$16^3+17^3+18^3+2(1^3+2^3)=19^3+20^3$

$33^3+34^3+35^3+36^3+2(1^3+2^3+3^3)=37^3+38^3+39^3$

$56^3+57^3+58^3+59^3+60^3+2(1^3+2^3+3^3+4^3)=61^3+62^3+63^3+64^3$

. . .
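The corrected equations can be verified for any $n$ with a short Java sketch (hypothetical class name). For $y=3n(n-1)$ it compares the lower sum of cubes plus the correction $2\sum_{i=1}^{n-1}i^3$ with the higher sum of cubes.

```java
// Checks the corrected cube equations for y = 3n(n-1) (hypothetical helper class).
final class CorrectedCubes {

    // Sum of v^3 for v from 'from' to 'to', inclusive (0 when from > to).
    static long cubeSum(long from, long to) {
        long s = 0;
        for (long v = from; v <= to; v++) s += v * v * v;
        return s;
    }

    // Correction term 2(1^3 + 2^3 + ... + (n-1)^3) added to the lower sum.
    static long correction(long n) {
        return 2 * cubeSum(1, n - 1);
    }

    // Lower sequence y-(n-1)..y plus the correction, versus higher sequence y+1..y+n-1.
    static boolean holds(long n) {
        long y = 3 * n * (n - 1);
        return cubeSum(y - (n - 1), y) + correction(n) == cubeSum(y + 1, y + n - 1);
    }

    public static void main(String[] args) {
        for (long n = 2; n <= 5; n++) {
            long y = 3 * n * (n - 1);
            System.out.printf("n=%d: %d..%d + %d | %d..%d  holds=%b%n",
                    n, y - (n - 1), y, correction(n), y + 1, y + n - 1, holds(n));
        }
    }
}
```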

These corrections have a geometric interpretation, which is what visual proofs of number-theoretic statements usually provide, and such proofs are a natural form for mathematical videos, as Mathologer nicely demonstrates. For example, if you take a cube that consists of $5^3$ unit cubes and try to cover all its sides by dismantling another cube (consisting of $6^3$ unit cubes) into 6 sides of $6^2$ unit cubes each, you will fall short by exactly 2 unit cubes of a third, fully formed cube of $7^3$ unit cubes. The other corrections serve the same purpose, adding layers more than 1 unit cube thick. For example, in the second equation, the cube that consists of $18^3$ unit cubes gets dismantled into $(1+2)6$ sides of $18^2$ unit cubes each. Now that I have written it, I realize how clumsy these facts sound when described in words, because mathematical notation is better suited to arithmetic proofs. But that was exactly the whole point of my exercise: to translate a mathologerized proof into the form that I prefer. If ChatGPT 3 or 4 were able to do that, by watching the video and reading its comments as I did, I would be very impressed. Instead, ChatGPT showed exactly the opposite, a complete lack of basic calculating skills, and, among other things, its MathJax code is broken:

On the other hand, I have no doubt that in the very near future it will be able to do this better than me. The other point is that this geometric interpretation shows that these corrections are natural, and visually proves them to be unavoidable if we want to get any pattern.

The other path of investigation is to insist on the original question without allowing corrections, and to show that it works only for exponents 1 and 2, covering the cases other than the base case n=2, for which we already know from Fermat's Last Theorem that it does not work. So we basically want to prove that there are either infinitely many solutions, for exponents 1 and 2, or no solutions at all, for all other exponents. Let us start with cubes: we have this cubic equation for y, and we want to prove that it has no positive integer solution for any n:

$y^3-3n(n-1)y^2-\frac{1}{2}n^2(n-1)^2=0$

We can simplify the equation by setting $n(n-1)=2k$, where $k$ is a positive integer because $n(n-1)$ is always even:

$y^3-6ky^2-2k^2=0$

By Cardano's formula, after the substitution $y=t+2k$, the real root of this cubic equation is (I will come back later to the other two solutions):

$y=\sqrt[3]{8k^3+k^2-\sqrt{(8k^3+k^2)^2-64k^6}}+\sqrt[3]{8k^3+k^2+\sqrt{(8k^3+k^2)^2-64k^6}}+2k=$

$\sqrt[3]{8k^3+k^2-\sqrt{16k^5+k^4}}+\sqrt[3]{8k^3+k^2+\sqrt{16k^5+k^4}}+2k=$

$\sqrt[3]{8k^3+k^2-k^2\sqrt{16k+1}}+\sqrt[3]{8k^3+k^2+k^2\sqrt{16k+1}}+2k=$

$\sqrt[3]{8k^3+k^2(1-\sqrt{16k+1})}+\sqrt[3]{8k^3+k^2(1+\sqrt{16k+1})}+2k$
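Evaluating this expression numerically is straightforward. The Java sketch below is a hypothetical reconstruction of such an experiment, not the program used for this post. It evaluates the cube-root expression for $y$ above with `Math.sqrt` and `Math.cbrt`, prints $y$ and $y-6k$ for $k=1$ to $k=100$, and offers the residual of the cubic as a sanity check. Both arguments of the cube roots are positive for $k\geq 1$, since $8k+1>\sqrt{16k+1}$.

```java
// Hypothetical reconstruction of the numerical experiment: real root of
// y^3 - 6k y^2 - 2k^2 = 0 via the cube-root expression, and its distance from 6k.
final class CardanoRoot {

    // cbrt(8k^3 + k^2(1 - sqrt(16k+1))) + cbrt(8k^3 + k^2(1 + sqrt(16k+1))) + 2k
    static double realRoot(long k) {
        double kk = k;
        double s = Math.sqrt(16 * kk + 1);
        double base = 8 * kk * kk * kk;
        return Math.cbrt(base + kk * kk * (1 - s)) + Math.cbrt(base + kk * kk * (1 + s)) + 2 * kk;
    }

    // Value of the cubic at y; close to zero if the formula is right.
    static double residual(double y, long k) {
        return y * y * y - 6.0 * k * y * y - 2.0 * k * k;
    }

    public static void main(String[] args) {
        for (long k = 1; k <= 100; k++) {
            double y = realRoot(k);
            System.out.printf("k=%3d  y=%.9f  y-6k=%.9f%n", k, y, y - 6 * k);
        }
    }
}
```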

A better number theorist than me would probably be able to prove that these are not natural numbers for any natural number $k$. I printed all values of $y$ for $k=1$ to $k=100$ in Java and saw that none of them is an integer, and that they approach $6k+\frac{1}{18}$ more and more closely as $k$ grows. This did not surprise me, because dividing the cubic equation by $y^2$ gives:

$$y=6k+2\frac{k^2}{y^2}$$

So if we assume that $y$ approaches $6k+C$ asymptotically as $k$ goes to infinity, then we have:

$$\lim_{k\to \infty}{(y-6k)}=2\lim_{k\to \infty}{\frac{k^2}{(6k+C)^2}}$$

$$\lim_{k\to \infty}{(y-6k)}=\frac{2}{36}=\frac{1}{18}$$

But this did not satisfy my tendency towards rigor either, so I looked for an alternative. The integer root theorem states that, for a monic polynomial with integer coefficients such as this one, any integer root must divide the constant term. So we can check the divisors of $2k^2$ that are apparent from its form and see if any of them fits, that is, $2$, $k$, $2k$, $k^2$ or $2k^2$.

For example, let us check $y=2k^2$ first:

$y^3-6ky^2-2k^2=8k^6-24k^5-2k^2=2k^2(4k^4-12k^3-1)$

There is no positive integer $k$ for which this is equal to zero. This can be concluded from the monotonicity of the two polynomial factors as $k$ goes to infinity, and on the interval where the function of $k$ is not monotonic, it misses zero for every small positive integer value of $k$; notably, for $k=3$, $4k^4-12k^3-1=-1$. More simply, $4k^4-12k^3$ is even, so $4k^4-12k^3-1$ is odd and can never be zero. So this is not a solution.

For $y=k^2$ we get:

$y^3-6ky^2-2k^2=k^6-6k^5-2k^2=k^2(k^4-6k^3-2)$

For $y=2k$ we get:

$y^3-6ky^2-2k^2=8k^3-24k^3-2k^2=-16k^3-2k^2=-2k^2(8k+1)$

For $y=k$ we get:

$y^3-6ky^2-2k^2=k^3-6k^3-2k^2=-5k^3-2k^2=-k^2(5k+2)$

For $y=2$ we get:

$y^3-6ky^2-2k^2=8-24k-2k^2$

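None of them is a solution either, for the same or similar reasons as in the first example: the last three values are negative for every positive $k$, and $k^4-6k^3-2=0$ would require $k^3(k-6)=2$, which no positive integer $k$ satisfies. These five candidates are not all the divisors of $2k^2$, however (for $k=6$, for instance, $3$, $4$ and $8$ also divide $72$), so this check alone does not complete the proof. The gap is closed by the relation $y=6k+2\frac{k^2}{y^2}$: since $y^2(y-6k)=2k^2>0$, any positive root satisfies $y>6k$, hence $0<y-6k=\frac{2k^2}{y^2}<\frac{2k^2}{36k^2}=\frac{1}{18}$. A positive root therefore lies strictly between the consecutive integers $6k$ and $6k+1$, which proves that this cubic equation for $y$ has no positive integer solutions for any positive integer $k$.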
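The divisor check tests only the five candidates $2$, $k$, $2k$, $k^2$ and $2k^2$, while the integer root theorem asks for every divisor of $2k^2$. A brute-force Java sketch (hypothetical class name) can test all positive divisors for a range of $k$. The bound $k\leq 200$ is an arbitrary choice that keeps every intermediate value well inside the range of `long`. This only gives evidence for a finite range, not a proof.

```java
// Hypothetical brute-force search: tests every positive divisor d of 2k^2 as a
// candidate root of y^3 - 6k y^2 - 2k^2 (integer root theorem), for k = 1..200.
final class IntegerRootSearch {

    // Value of the cubic at y; exact in long arithmetic for the small k used here.
    static long f(long y, long k) {
        return y * y * y - 6 * k * y * y - 2 * k * k;
    }

    // True if some positive divisor of 2k^2 is a root of the cubic.
    static boolean hasPositiveIntegerRoot(long k) {
        long c = 2 * k * k;
        for (long d = 1; d * d <= c; d++) {
            // d and c/d are the two divisors paired at this step
            if (c % d == 0 && (f(d, k) == 0 || f(c / d, k) == 0)) return true;
        }
        return false;
    }

    public static void main(String[] args) {
        int found = 0;
        for (long k = 1; k <= 200; k++) {
            if (hasPositiveIntegerRoot(k)) {
                System.out.println("integer root for k=" + k);
                found++;
            }
        }
        System.out.println("values of k with an integer root: " + found);
    }
}
```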

Finally, the Wolfram plugin for ChatGPT, announced on March 23rd 2023 at https://writings.stephenwolfram.com/2023/03/chatgpt-gets-its-wolfram-superpowers/, already addresses all the issues I presented. That article explains in detail many aspects of interfacing ChatGPT with Wolfram Alpha and the Wolfram Language; for example, if ChatGPT forgets that it is inferior to Wolfram in mathematical ability, one can prompt "Use Wolfram" to force it to use it. This also makes my essay redundant, at least as an investigative effort, but since I had already put effort into writing it, I will probably present it anyway.

So, it is one thing to take a piece of software that is extremely good at math, such as Wolfram Mathematica, and another that is extremely good at talking but lousy at math, like ChatGPT, and combine them into one that is perfect. This kind of external fixing and patching was expected, but what happens if ChatGPT gets insight into its own code? ChatGPT is a program that can write programs. Could ChatGPT write itself? Could it improve itself? Where could this lead?

ChatGPT and the Intelligence Explosion

On the other hand, our brain does everything with just one extremely deep neural network, and it achieves NGI. So, do people really have to insist on improving the code in order to achieve AGI?
